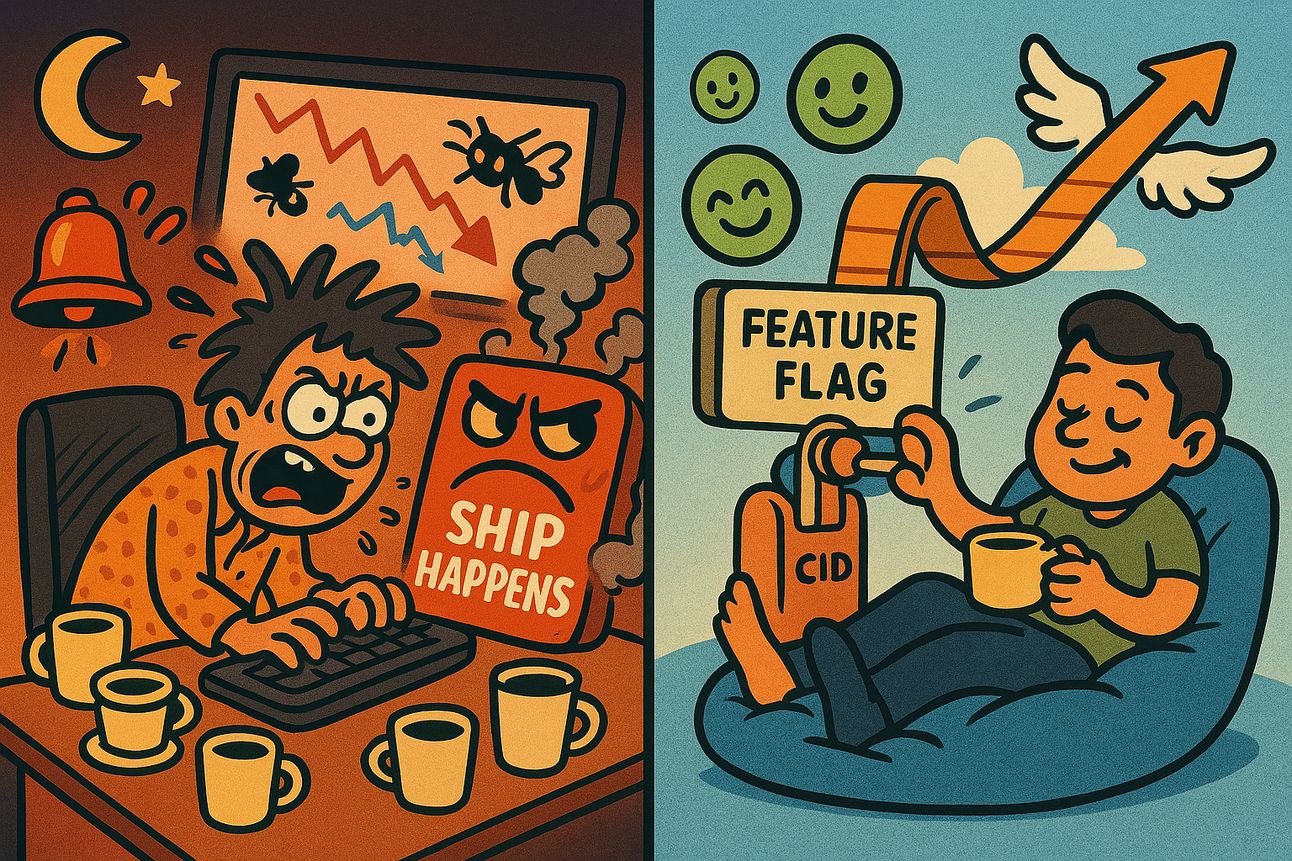

The way most teams deploy code is fundamentally broken. The tight coupling of code deployments with feature releases creates unnecessary risk, while traditional branching strategies add complexity that slows teams down. There's a better way, one that dramatically improves both velocity and stability without the midnight panics and merge conflicts that have become normalized in our industry.

The Midnight Deploy That Broke Everything

It was 11:30 PM on a Thursday when my phone erupted with Slack notifications. Our team had just deployed a major feature update to production, and chaos ensued. The dashboard showed a 40% drop in transactions. Customer support tickets flooded in. Our third-largest client was threatening to cancel their contract.

"We need to roll back," the director messaged, his anxiety palpable even through text.

But we couldn't—at least not easily. The deployment included database migrations that couldn't be reversed without data loss. Our feature branch had been merged into main, deployed, and immediately released to all users in one monolithic operation. There was no way to turn off just the problematic component.

"Let's hotfix it," someone suggested.

By 3 AM, bleary-eyed developers had pushed a fix that stopped the bleeding, but the damage was done. We'd lost tens of thousands in revenue and burned enormous social capital with our customers.

The next morning, our post-mortem revealed an uncomfortable truth: the feature worked perfectly in our staging environment. The issue only manifested in production where real user data and traffic patterns exposed an edge case we'd never considered.

This scenario plays out in companies every day. It's not because teams are incompetent—it's because they're working within a deployment paradigm that assumes every commit pushed to production must immediately be exposed to users. This fundamental assumption is wrong, dangerous, and completely avoidable.

The Deployment-Release Coupling Fallacy

Most engineering organizations operate with deployment and release fused together—like conjoined twins that desperately need separation surgery.

A deployment is the process of moving code from a development environment to production. It's a technical operation.

A release is the act of exposing functionality to users. It's a business decision.

When these processes are coupled, every deployment triggers an immediate release, creating a high-stakes environment where code changes are simultaneously technical and business events. This coupling forces teams to:

Deploy less frequently to reduce risk

Create complex branch strategies to isolate changes

Rely heavily on pre-production testing (which never fully replicates production)

Roll back entire deployments when a single feature fails

The result is slower development cycles, increased risk, and developer burnout. But it gets worse. The tools we use to manage this complexity—particularly our Git workflows—often exacerbate the problem instead of solving it.

Traditional Git Workflows: A Recipe for Merge Hell

GitFlow was revolutionary when it emerged. It gave teams a structured approach to managing the inherent complexity of multi-developer software projects. But somewhere along the way, we confused process complexity with process effectiveness.

Most teams today use some variant of feature branching, where developers:

Create a branch from main for each feature

Develop in isolation on that branch

Open a Pull Request when ready

Wait for code review

Address feedback

Eventually merge to main

Release on some cadence (often tied to sprints)

This workflow creates several critical problems:

Integration Delay: Changes sit in isolated branches for days or weeks before being merged, increasing the likelihood of painful merge conflicts.

Review Bottlenecks: Large, monolithic PRs are hard to review, leading to superficial "LGTM" approvals or lengthy delays.

Context Switching: Developers must pause work while waiting for reviews, then context-switch back when feedback arrives.

Synchronization Issues: Long-lived branches drift further from main, making them progressively harder to integrate.

I once worked on a team that had so fully embraced GitFlow that release days became all-hands emergencies. Merge conflicts had conflicts within conflicts, like an Inception movie of code integration. During one particularly painful release, it took three senior engineers nine hours to resolve the conflicts between our "development" and "release" branches. Nine hours of highly skilled developer time wasted on what was essentially bureaucratic overhead.

This is madness, and deep down, WE ALL KNOW IT. Yet we persist because alternative approaches seem risky or unfamiliar.

Trunk-Based Development: The High-Performance Engine

Trunk-based development (TBD) is fundamentally simple: all developers work on a single branch (usually called main or trunk), and they integrate changes frequently—often multiple times per day.

When I first introduced TBD at a previous company, the immediate reaction was skepticism bordering on hostility:

"We'll break the build constantly!"

"What about half-finished features?"

"How do we maintain stable releases?"

These concerns are valid but addressable. Here's how successful organizations make TBD work:

Small, Frequent Commits: Changes are broken down into minimal, independently valuable increments that can be integrated without breaking functionality.

Comprehensive Automated Testing: Fast, reliable test suites validate changes before they hit the shared branch.

Feature Flags: Code for in-progress features is deployed but not activated until ready (more on this shortly).

Observability and Monitoring: Real-time insights detect issues quickly in production.

Adopting TBD transforms not just your codebase but your entire engineering culture. Teams that embrace it typically see:

80% reduction in time spent resolving merge conflicts

60% increase in deployment frequency

30-50% reduction in lead time from commit to production

Significant improvements in developer satisfaction

However, TBD alone doesn't solve all the problems, particularly around code review and feature development. That's where diff stacking enters the picture.

Diff Stacking: Breaking Down Mountains Into Molehills

I spent a handful of years at Meta, and if you ask any engineer there what the best thing about working at Meta was, I guarantee the vast majority will tell you "diff stacking" and "Phabricator."

Diff stacking (often called "stacked PRs" in GitHub-based workflows) is a workflow where developers organize related changes into a sequence of dependent diffs, each building on the previous one.

Imagine you're implementing a new user authentication system. Instead of creating one massive PR with everything, you create a stack of smaller, reviewable pieces:

First diff: Database schema changes

Second diff: Core authentication service implementation

Third diff: API endpoints

Fourth diff: Frontend integration

Each diff is reviewed and merged sequentially. This approach offers several powerful advantages:

Reduced Cognitive Load: Reviewers focus on smaller, more coherent changes instead of trying to understand a complete feature at once.

Parallel Work and Review: You can continue building on top of your submitted changes while waiting for reviews of earlier diffs.

Faster Feedback Cycles: Get input on foundational parts of your implementation before completing the entire feature.

Easier Debugging: When things break, it's much simpler to identify which specific change caused the issue.
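The four-diff authentication stack described above can be modeled as a small data structure, which makes the sequential-merge rule concrete. This is a minimal sketch under stated assumptions: the `Diff` shape and `landReadyDiffs` function are hypothetical and do not reflect how Phabricator, GitHub, or any stacking tool stores diffs. It shows that an upper diff can be reviewed and approved early (the "parallel work and review" benefit) but still waits for everything beneath it.

```typescript
// Hypothetical model of a diff stack: each diff points at the diff it builds on.
interface Diff {
  id: string;
  title: string;
  parent: string | null; // null = based directly on main
  approved: boolean;
  landed: boolean;
}

// Land diffs bottom-up, stopping at the first one that is not ready,
// because every diff above it depends on it.
function landReadyDiffs(stack: Diff[]): string[] {
  const landedIds: { [id: string]: boolean } = {};
  const landedNow: string[] = [];
  for (const diff of stack) {
    if (diff.landed) {
      landedIds[diff.id] = true;
      continue;
    }
    const parentLanded = diff.parent === null || landedIds[diff.parent] === true;
    if (!diff.approved || !parentLanded) break;
    diff.landed = true;
    landedIds[diff.id] = true;
    landedNow.push(diff.id);
  }
  return landedNow;
}

// The stack from the example above, ordered bottom (first) to top (last).
const stack: Diff[] = [
  { id: "D1", title: "Database schema changes", parent: null, approved: true, landed: false },
  { id: "D2", title: "Core authentication service", parent: "D1", approved: true, landed: false },
  { id: "D3", title: "API endpoints", parent: "D2", approved: false, landed: false },
  { id: "D4", title: "Frontend integration", parent: "D3", approved: true, landed: false },
];

console.log(landReadyDiffs(stack)); // D1 and D2 land; D4 is approved but waits on D3
```

Once D3 is approved, running the same function again lands D3 and D4 together, with no rebasing of unrelated work.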

At one company I consulted with, adopting diff stacking reduced their average review time from 2.5 days to just 4 hours. Pull requests that once took a week to merge now flowed through in a single day. The impact on team velocity was so dramatic and immediate that the CEO—who initially choked on his coffee when he saw my hourly rate—later joked that I should've charged double. Nothing makes executives happier than feeling like they've accidentally underpaid for something that actually worked.

The challenging part is that most Git workflows and tools weren't built with diff stacking in mind. GitHub, while dominating the space, doesn't natively support this model well. That's where specialized tools come in:

Graphite: Purpose-built for the stacked-diff workflow, with GitHub integration

ezyang/ghstack: A lightweight open-source alternative

ReviewStack: A UI for GitHub PRs with custom support for stacked changes

But even with these tools, there's still one critical piece missing: how do we deploy code continuously while controlling when features are released to users?

Feature Flags: The Great Decoupler

Feature flags (also called feature toggles or feature switches) are conditional statements in your code that determine whether a feature is active.

In its simplest form, a feature flag looks like this:

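if (isFeatureEnabled('new-authentication')) {
  return renderNewAuthentication(); // new code path (hypothetical helper name)
} else {
  return renderLegacyAuthentication(); // existing code path (hypothetical helper name)
}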
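The snippet above calls `isFeatureEnabled` without defining it. One minimal way to back it is sketched below, assuming a simple in-memory store; a hosted flag service would hold this state centrally instead. All names here (`flagStates`, `setFlag`, `authenticationFlow`) are hypothetical. The point it illustrates is the decoupling: the new code path is already deployed, and flipping the flag is the release.

```typescript
// Minimal sketch: an in-memory flag store standing in for a real flag service.
const flagStates: { [flag: string]: boolean } = {
  "new-authentication": false, // deployed, but not yet released
};

// Unknown flags evaluate to false, so a typo never exposes unfinished code.
function isFeatureEnabled(flag: string): boolean {
  return flagStates[flag] === true;
}

// Flipping a flag is a release decision; no new deployment is involved.
function setFlag(flag: string, enabled: boolean): void {
  flagStates[flag] = enabled;
}

function authenticationFlow(): string {
  if (isFeatureEnabled("new-authentication")) {
    return "new authentication flow";
  }
  return "legacy authentication flow";
}

console.log(authenticationFlow()); // "legacy authentication flow"
setFlag("new-authentication", true);
console.log(authenticationFlow()); // "new authentication flow"
```

Defaulting unknown flags to "off" is a deliberate choice: a missing or misspelled flag fails safe by keeping users on the existing path.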

This simple pattern transforms your deployment process. Now you can:

Deploy unfinished features to production safely

Enable features for specific user segments

Roll out gradually to detect issues early

Kill problematic features instantly without rolling back code

A/B test competing implementations

When I implemented feature flags at a mid-size fintech startup, our deployment frequency increased from twice a week to 10+ times daily. More importantly, production incidents decreased by 60% because we could catch issues early and limit exposure.

The key insight is that feature flags transform binary decisions ("deploy or don't deploy") into granular controls. They're the difference between an on/off light switch and a dimmer that gives you precise control.

Modern feature flag systems like PostHog, Statsig, LaunchDarkly, Split.io, or open-source alternatives like Flagsmith provide sophisticated capabilities:

Targeting rules: Enable features based on user attributes, geolocation, or custom criteria

Percentage rollouts: Gradually increase exposure from 1% to 100%

Kill switches: Emergency controls to disable problematic features

Metrics integration: Track how features impact business and technical KPIs
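Targeting rules, percentage rollouts, and kill switches can be combined in a few lines. The evaluator below is a hypothetical sketch, not the API of PostHog, Statsig, LaunchDarkly, Split.io, or Flagsmith; the `FlagConfig` fields are invented for illustration. Hashing the user ID into a stable 0-99 bucket is one common way to implement percentage rollouts, and it is assumed here.

```typescript
interface User {
  id: string;
  country: string;
  isEmployee: boolean;
}

// Hypothetical flag configuration; field names do not mirror any vendor's schema.
interface FlagConfig {
  killSwitch: boolean; // true = off for everyone, regardless of other rules
  employeesAlwaysOn: boolean; // targeting rule: internal users first
  allowedCountries: string[]; // targeting rule; empty means "any country"
  rolloutPercent: number; // 0 to 100
}

// Deterministic string hash (multiply by 31, kept to 32 bits) so a given user
// lands in the same bucket on every server and every request.
function stableHash(input: string): number {
  let hash = 0;
  for (let i = 0; i < input.length; i++) {
    hash = (hash * 31 + input.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Bucket 0-99; salting with the flag name keeps different flags independent.
function bucketFor(flagName: string, userId: string): number {
  return stableHash(flagName + ":" + userId) % 100;
}

function isEnabledFor(flagName: string, flag: FlagConfig, user: User): boolean {
  if (flag.killSwitch) return false;
  if (flag.employeesAlwaysOn && user.isEmployee) return true;
  if (flag.allowedCountries.length > 0 && flag.allowedCountries.indexOf(user.country) === -1) {
    return false;
  }
  return bucketFor(flagName, user.id) < flag.rolloutPercent;
}
```

Because a user's bucket depends only on the flag name and user ID, raising `rolloutPercent` from 10 to 50 keeps everyone who already had the feature and adds new users, rather than reshuffling who sees it.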

But implementing feature flags requires discipline. Without proper management, they can become a source of technical debt and complexity. Successful teams treat feature flags as a first-class part of their architecture, with practices like:

Time-limited flags with automatic expiration

Regular auditing of active flags

Documentation of flag purpose and ownership

Testing of both flag states (on and off)
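These practices can start as very little code. The sketch below assumes a hypothetical in-repo registry where each flag declares its purpose, owner, and expiration date; an audit lists flags past their date, and a small helper runs one test body under both flag states. The `FlagRecord` shape, the example flags, and the `greeting` function are all invented for illustration.

```typescript
// Hypothetical registry entry: purpose, ownership, and expiration are required fields.
interface FlagRecord {
  name: string;
  owner: string;
  purpose: string;
  expiresOn: string; // ISO date, e.g. "2025-03-31"
}

function findExpiredFlags(registry: FlagRecord[], today: Date): FlagRecord[] {
  return registry.filter((flag) => new Date(flag.expiresOn).getTime() < today.getTime());
}

// Run one test body with the flag off and again with it on, so the old and
// new code paths both stay covered until the flag is removed.
function forBothFlagStates(testBody: (enabled: boolean) => void): void {
  testBody(false);
  testBody(true);
}

const registry: FlagRecord[] = [
  { name: "new-authentication", owner: "identity-team", purpose: "Gradual rollout of new login", expiresOn: "2025-01-31" },
  { name: "new-checkout", owner: "payments-team", purpose: "A/B test of checkout redesign", expiresOn: "2025-12-31" },
];

// A regular audit, e.g. run on a schedule in CI.
for (const flag of findExpiredFlags(registry, new Date("2025-06-01"))) {
  console.log(`${flag.name} expired on ${flag.expiresOn}; ask ${flag.owner} to remove it`);
}
// prints: new-authentication expired on 2025-01-31; ask identity-team to remove it

// Hypothetical flagged function used to show testing both states.
function greeting(name: string, newCopyEnabled: boolean): string {
  return newCopyEnabled ? `Welcome back, ${name}!` : `Hello, ${name}`;
}

forBothFlagStates((enabled) => {
  const text = greeting("Ada", enabled);
  if (text.indexOf("Ada") === -1) throw new Error(`flag=${enabled}: name missing`);
});
```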

Production: The Only Test Environment That Matters

"But what about our staging environment?" teams often ask when I suggest deploying frequently to production.

Here's an uncomfortable truth: your staging environment is a pale imitation of production. It lacks:

Real user behavior patterns

Production-scale data

Actual traffic volumes and patterns

Integration complexities with third-party services

The chaos of real-world usage

I once worked with a team that spent three weeks testing a feature in staging, only to have it fail in production anyway. Their staging database had 10,000 records; production had millions. Queries that performed beautifully in staging brought production servers to their knees.

This doesn't mean staging environments are useless—they're great for initial verification. But they can never replace the validation you get from real production usage.

With feature flags, you can adopt a progressive approach:

Deploy to production behind a feature flag

Enable for internal users only

Expand to a small percentage of beta users

Monitor metrics and errors closely

Gradually increase exposure while watching for issues

Reach 100% only when confident

This approach turns releases into controlled experiments rather than binary, high-risk events. Best of all, when things go wrong (and they will), you can disable the feature in seconds, not hours.
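The progressive rollout steps above can be expressed as a plan plus a promotion rule. This is a sketch under stated assumptions: the stage sizes, the 1,000-request minimum sample, and the "more than twice the baseline error rate" kill threshold are hypothetical choices, not recommendations from any specific tool.

```typescript
interface Stage {
  audience: string;
  percent: number;
}

// Hypothetical plan mirroring the steps above: internal, beta, then gradual exposure.
const rolloutPlan: Stage[] = [
  { audience: "internal users", percent: 100 },
  { audience: "beta users", percent: 5 },
  { audience: "all users", percent: 10 },
  { audience: "all users", percent: 50 },
  { audience: "all users", percent: 100 },
];

interface StageMetrics {
  requests: number;
  errors: number;
}

type Decision =
  | { action: "advance"; nextStage: number }
  | { action: "hold"; reason: string }
  | { action: "kill"; reason: string };

const MIN_REQUESTS = 1000; // don't judge a stage on too little traffic

function decide(current: number, metrics: StageMetrics, baselineErrorRate: number): Decision {
  if (metrics.requests < MIN_REQUESTS) {
    return { action: "hold", reason: "not enough traffic yet" };
  }
  const errorRate = metrics.errors / metrics.requests;
  if (errorRate > 2 * baselineErrorRate) {
    // Flip the kill switch: users return to the old code path without a rollback.
    return { action: "kill", reason: `error rate ${errorRate.toFixed(4)} vs baseline ${baselineErrorRate}` };
  }
  if (current === rolloutPlan.length - 1) {
    return { action: "hold", reason: "fully rolled out" };
  }
  return { action: "advance", nextStage: current + 1 };
}

console.log(decide(1, { requests: 5000, errors: 30 }, 0.002).action); // "kill" (0.6% vs 0.2% baseline)
console.log(decide(1, { requests: 5000, errors: 5 }, 0.002).action); // "advance"
```

Keeping the plan as data means a human or a scheduled job can apply the same rule at every stage, and reaching 100% is simply the last stage passing.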

The Four Pillars of Modern Deployment

Based on my experience implementing these practices across organizations of various sizes, I've identified four critical pillars for success:

1. Continuous Integration Infrastructure

Your CI pipeline needs to be fast, reliable, and informative. It should:

Run comprehensive tests on every commit

Complete within 10-15 minutes for most changes

Provide clear, actionable feedback on failures

Support parallel execution for speed

Include both unit and integration tests

Investing in CI infrastructure pays enormous dividends. Teams with robust CI spend up to 50% less time dealing with integration issues and defects.
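Parallel execution is usually what keeps a growing suite inside a 10-15 minute budget. A minimal sketch of one way to split tests across workers, assuming per-file timings from earlier CI runs are available: the greedy "longest file first, onto the least-loaded worker" heuristic. The file names and durations are invented for illustration.

```typescript
interface TestFile {
  path: string;
  seconds: number; // duration recorded on a previous CI run
}

function shardTests(files: TestFile[], workers: number): { shards: TestFile[][]; wallClockSeconds: number } {
  const shards: TestFile[][] = [];
  const totals: number[] = [];
  for (let w = 0; w < workers; w++) {
    shards.push([]);
    totals.push(0);
  }
  // Place the longest files first so the big ones don't pile up at the end.
  const longestFirst = files.slice().sort((a, b) => b.seconds - a.seconds);
  for (const file of longestFirst) {
    let least = 0;
    for (let w = 1; w < workers; w++) {
      if (totals[w] < totals[least]) least = w;
    }
    shards[least].push(file);
    totals[least] += file.seconds;
  }
  // The pipeline is only as fast as its slowest worker.
  return { shards, wallClockSeconds: Math.max(...totals) };
}

const suite: TestFile[] = [
  { path: "checkout.integration.test.ts", seconds: 300 },
  { path: "auth.integration.test.ts", seconds: 240 },
  { path: "search.integration.test.ts", seconds: 200 },
  { path: "api.unit.test.ts", seconds: 180 },
  { path: "models.unit.test.ts", seconds: 120 },
  { path: "utils.unit.test.ts", seconds: 60 },
];

const serial = suite.reduce((sum, f) => sum + f.seconds, 0); // 1100 s, a little over 18 minutes
console.log(serial, shardTests(suite, 3).wallClockSeconds); // 1100 380 (about 6 minutes)
```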

2. Feature Flag Management System

Whether you build or buy, you need a system that:

Makes flag creation simple and consistent

Provides centralized control of flag states

Offers fine-grained targeting capabilities

Integrates with your monitoring systems

Tracks flag usage and assists with cleanup

Feature flags without proper management quickly become an unmanageable mess.
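"Tracks flag usage and assists with cleanup" can be as simple as wrapping each evaluation. The sketch below is hypothetical (the `FlagUsageTracker` class and its staleness rule are invented for illustration): it records when each flag was last read and what it returned, then reports flags nobody evaluates anymore, or flags that always return the same value, as candidates for deleting the flag and its dead code path.

```typescript
type Usage = { on: number; off: number; lastSeenMs: number };

class FlagUsageTracker {
  private usage: { [flag: string]: Usage } = {};

  // Pass-through: returns the result so it can wrap an existing flag check.
  record(flag: string, result: boolean, nowMs: number): boolean {
    const entry = this.usage[flag] || { on: 0, off: 0, lastSeenMs: 0 };
    if (result) entry.on++;
    else entry.off++;
    entry.lastSeenMs = nowMs;
    this.usage[flag] = entry;
    return result;
  }

  cleanupCandidates(nowMs: number, staleAfterMs: number): string[] {
    const candidates: string[] = [];
    for (const flag of Object.keys(this.usage)) {
      const u = this.usage[flag];
      if (nowMs - u.lastSeenMs > staleAfterMs) {
        candidates.push(`${flag}: not evaluated recently`);
      } else if (u.on === 0 || u.off === 0) {
        candidates.push(`${flag}: always ${u.on > 0 ? "on" : "off"}`);
      }
    }
    return candidates;
  }
}

const DAY_MS = 24 * 60 * 60 * 1000;
const tracker = new FlagUsageTracker();
for (let i = 0; i < 5; i++) tracker.record("new-authentication", true, 10 * DAY_MS);
tracker.record("new-checkout", true, 10 * DAY_MS);
tracker.record("new-checkout", false, 10 * DAY_MS);
tracker.record("old-banner", false, 1 * DAY_MS);

console.log(tracker.cleanupCandidates(10 * DAY_MS, 7 * DAY_MS));
// new-authentication is always on; old-banner has not been evaluated in 9 days
```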

3. Trunk-Based Development Workflow

Your development workflow should:

Keep branches short-lived (hours, not days)

Encourage small, incremental commits

Make it easy to rebase onto main continuously

Support diff stacking for larger features

Include automation for common tasks

The goal is to make integrating with the main branch the path of least resistance.
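Some of that automation can be as small as a scheduled check on branch age. A sketch, assuming branch creation times are available from your Git host; the 24-hour limit, the branch data, and the `branchesOverAge` function are hypothetical.

```typescript
interface BranchInfo {
  name: string;
  createdAt: Date;
}

const HOUR_MS = 60 * 60 * 1000;

// Returns a short message for every branch older than maxAgeHours.
function branchesOverAge(branches: BranchInfo[], now: Date, maxAgeHours: number): string[] {
  return branches
    .filter((b) => now.getTime() - b.createdAt.getTime() > maxAgeHours * HOUR_MS)
    .map((b) => {
      const ageHours = Math.floor((now.getTime() - b.createdAt.getTime()) / HOUR_MS);
      return `${b.name} is ${ageHours}h old: merge it, or split it into smaller diffs`;
    });
}

const openBranches: BranchInfo[] = [
  { name: "fix-login-typo", createdAt: new Date("2025-05-02T09:00:00Z") },
  { name: "payments-rewrite", createdAt: new Date("2025-04-28T12:00:00Z") },
];

console.log(branchesOverAge(openBranches, new Date("2025-05-02T12:00:00Z"), 24));
// only payments-rewrite (96h old) is reported
```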

4. Observability and Monitoring

You can't safely deploy to production without knowing what's happening there:

Real-time error tracking and alerting

Performance monitoring at both system and user levels

Business metrics tied to technical changes

Logging that facilitates quick debugging

Dashboards that show the health of recent deployments

When something goes wrong, you need to know immediately and have the tools to diagnose quickly.
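Tying metrics to technical changes mostly comes down to tagging telemetry with the deploy that produced it. A minimal sketch with a hypothetical event shape, summarizing request count, error rate, and nearest-rank p95 latency per deploy, the kind of numbers a recent-deployments dashboard would show. The deploy IDs and events are invented.

```typescript
// Hypothetical event shape: every request is tagged with the deploy that served it.
interface RequestEvent {
  deployId: string;
  ok: boolean;
  latencyMs: number;
}

interface DeployHealth {
  requests: number;
  errorRate: number;
  p95LatencyMs: number;
}

function healthByDeploy(events: RequestEvent[]): { [deployId: string]: DeployHealth } {
  const grouped: { [deployId: string]: RequestEvent[] } = {};
  for (const e of events) {
    if (!grouped[e.deployId]) grouped[e.deployId] = [];
    grouped[e.deployId].push(e);
  }
  const summary: { [deployId: string]: DeployHealth } = {};
  for (const id of Object.keys(grouped)) {
    const group = grouped[id];
    const errors = group.filter((e) => !e.ok).length;
    const latencies = group.map((e) => e.latencyMs).sort((a, b) => a - b);
    // Nearest-rank 95th percentile.
    const p95 = latencies[Math.ceil(0.95 * latencies.length) - 1];
    summary[id] = { requests: group.length, errorRate: errors / group.length, p95LatencyMs: p95 };
  }
  return summary;
}

const events: RequestEvent[] = [
  { deployId: "deploy-101", ok: true, latencyMs: 100 },
  { deployId: "deploy-101", ok: true, latencyMs: 120 },
  { deployId: "deploy-102", ok: true, latencyMs: 100 },
  { deployId: "deploy-102", ok: false, latencyMs: 900 },
];

const health = healthByDeploy(events);
console.log(health);
// deploy-101: errorRate 0,   p95LatencyMs 120
// deploy-102: errorRate 0.5, p95LatencyMs 900
```

With every event carrying a deploy ID (and, in a fuller version, the flags that were active), a spike points at a specific change instead of "something in the last release."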

Making the Transition: A Practical Roadmap

Transitioning to this model isn't an overnight change. Based on helping multiple teams make this shift, here's a practical roadmap:

Phase 1: Lay the Foundation (1-2 Months)

Implement comprehensive automated testing

Set up or improve CI infrastructure

Start using feature flags for small, low-risk changes

Experiment with trunk-based development on a single team

Phase 2: Scale the Practices (2-3 Months)

Expand trunk-based development to more teams

Introduce diff stacking for larger features

Build or adopt a feature flag management system

Develop production monitoring capabilities

Create documentation and training materials

Phase 3: Optimize and Refine (Ongoing)

Measure deployment frequency, lead time, and stability

Continuously improve flag management to reduce technical debt

Refine CI/CD pipelines for faster feedback

Develop team-specific workflows within the broader framework
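The Phase 3 measurements can be computed from plain deployment records. A sketch, assuming each record carries the commit time, deploy time, and whether the deploy caused an incident. Using change failure rate (the share of deploys that caused an incident) as the stability measure is an assumption here, and the records are invented.

```typescript
interface DeployRecord {
  commitTime: Date; // when the change was committed
  deployTime: Date; // when it reached production
  causedIncident: boolean;
}

function median(values: number[]): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

function deliveryMetrics(deploys: DeployRecord[], periodDays: number) {
  const HOUR = 60 * 60 * 1000;
  const leadTimesHours = deploys.map((d) => (d.deployTime.getTime() - d.commitTime.getTime()) / HOUR);
  const failures = deploys.filter((d) => d.causedIncident).length;
  return {
    deploysPerDay: deploys.length / periodDays, // deployment frequency
    medianLeadTimeHours: median(leadTimesHours), // lead time from commit to production
    changeFailureRate: failures / deploys.length, // stability
  };
}

// Four deploys over a two-day window.
const records: DeployRecord[] = [
  { commitTime: new Date("2025-05-01T09:00:00Z"), deployTime: new Date("2025-05-01T10:00:00Z"), causedIncident: false },
  { commitTime: new Date("2025-05-01T12:00:00Z"), deployTime: new Date("2025-05-01T14:00:00Z"), causedIncident: false },
  { commitTime: new Date("2025-05-01T20:00:00Z"), deployTime: new Date("2025-05-02T06:00:00Z"), causedIncident: false },
  { commitTime: new Date("2025-05-02T08:00:00Z"), deployTime: new Date("2025-05-02T11:00:00Z"), causedIncident: true },
];

console.log(deliveryMetrics(records, 2));
// deploysPerDay 2, medianLeadTimeHours 2.5, changeFailureRate 0.25
```

The median is used for lead time so that one slow change (the 10-hour one here) doesn't hide an otherwise fast pipeline.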

The most common mistake I see is trying to change everything at once. Start small, prove the concept, and build confidence before expanding.

The Outcome-Focused Engineer

This entire approach aligns perfectly with the Product Engineer mindset—focusing on outcomes rather than outputs. When deployments and releases are decoupled, engineers can think more clearly about the impact of their work:

Is this feature delivering value to users?

Are we measuring the right metrics?

How can we validate this change with minimal risk?

What's the fastest path to learning from real users?

The best teams I've worked with don't measure success by how many features they ship—they measure by how effectively they solve real problems while maintaining system stability.

The tight coupling of deployment and release represents a fundamental constraint that limits engineering teams' velocity and increases risk. By adopting trunk-based development, diff stacking, and feature flags, teams can break free from this constraint and develop a workflow that emphasizes:

Small, frequent integrations over long-lived branches

Incremental, reviewable changes over monolithic PRs

Controlled exposure over binary releases

Real-world validation over simulated testing

The transition isn't easy—it requires changes to tooling, processes, and most importantly, mindset. But the rewards are substantial: faster delivery, higher quality, reduced risk, and happier engineers.

The question isn't whether your team can afford to make these changes. It's whether you can afford not to.
